What is this flow?

There is a flow to the way software is developed and tested, no matter how you manage your projects. Things typically start with some sort of requirement work item that describes the business problem, what the client wants to do, and the benefit the client would receive if it were implemented. Yes, I just described the basics of a user story, which is where we should all be by now when it comes to software development. The developers, testers, and whoever else is contributing to making this work item a reality then break the requirement down into the tasks they are going to work on to make it happen.

The developers get to work writing code and completing their tasks, while the testers start writing the test cases they will use to prove either that the new requirement works as planned or that it does not. These test cases all go into a test plan that represents the release you are currently working on. As the developers complete their coding the testers start testing, and any test cases that do not pass go back to the developers for rework. How this is managed depends on how the teams are structured. In a scrum team, where developers and testers are on the same team, this is typically a conversation, and the developer might just add more tasks because this is work that got missed. Where the flow between developers and testers is still a separate handoff, a holdover from the waterfall days, a bug might be issued that goes back to the developers, and you follow that through to completion.

As the work items move from the business to the developers they become Active. When the developers are code complete the work items should become Resolved, and as the testers confirm that the code is working properly they become Closed. Any time a work item turns out not to be really resolved (developer wishful thinking), its state moves back to Active. In TFS (Team Foundation Server) there is an out-of-the-box report called Reactivations that keeps track of the work items that moved from Resolved or Closed back to Active. A high number of reactivations is the first sign that there are some serious communication problems between development and test.
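To make the idea of a reactivation concrete, here is a minimal sketch that counts them from a list of states a work item has passed through. This is not how the TFS report is built; the `count_reactivations` function, the `history` dictionary, and the story titles are all hypothetical names invented for illustration.

```python
# Hypothetical illustration only: this does not query TFS.
# A reactivation is a move from Resolved or Closed back to Active.
REACTIVATING_FROM = {"Resolved", "Closed"}


def count_reactivations(state_history):
    """Count transitions from Resolved or Closed back to Active."""
    count = 0
    # Pair each state with the one that followed it.
    for previous, current in zip(state_history, state_history[1:]):
        if previous in REACTIVATING_FROM and current == "Active":
            count += 1
    return count


# Made-up state histories for two made-up work items.
history = {
    "Story 101": ["Active", "Resolved", "Closed"],
    "Story 102": ["Active", "Resolved", "Active", "Resolved", "Closed", "Active"],
}

reactivations = {
    title: count_reactivations(states) for title, states in history.items()
}
print(reactivations)
```

A work item that keeps bouncing like the second one is the kind of thing worth a conversation between the developer and the tester.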

With all the Requirements and Bugs Closed, How Will I Know What to Test?

This is where I find many teams start to get a little weird and overcomplicate their workflows. I have seen far too many clients take the approach of adding extra states that say where the bug is by naming the environment it is being tested in. For instance, they might have a state called Ready for System Testing or Ready for UAT, and so on. Initially this might sound sensible and the right thing to do. However, I am here to tell you that it is not beneficial: it loses the purpose of the states, and the workflow is going to drown you in the amount of work it takes to manage. Let me tell you why.

Think of the state as a measure of how developed that requirement or bug is. It starts off as New or Proposed, depending on your process template, and from there we approve it by changing the state to Approved or Active. Teams that use Active in their workflow don't start working on the item until it is moved into the current iteration. Teams that use Approved also move the item into the current iteration, but they then move the state to Committed when they start working on it. At code completion the Active items go to Resolved, at which point the testers begin their testing and, if satisfied, close the work item. In the Committed group the developers work very closely with the testers, who have been testing all along, so when the test cases are passing the work item moves to Done. Either way, the work on these work items is done, so what happens next is that we take the build that represents all the completed work and move it through the release pipeline. Are you with me so far?

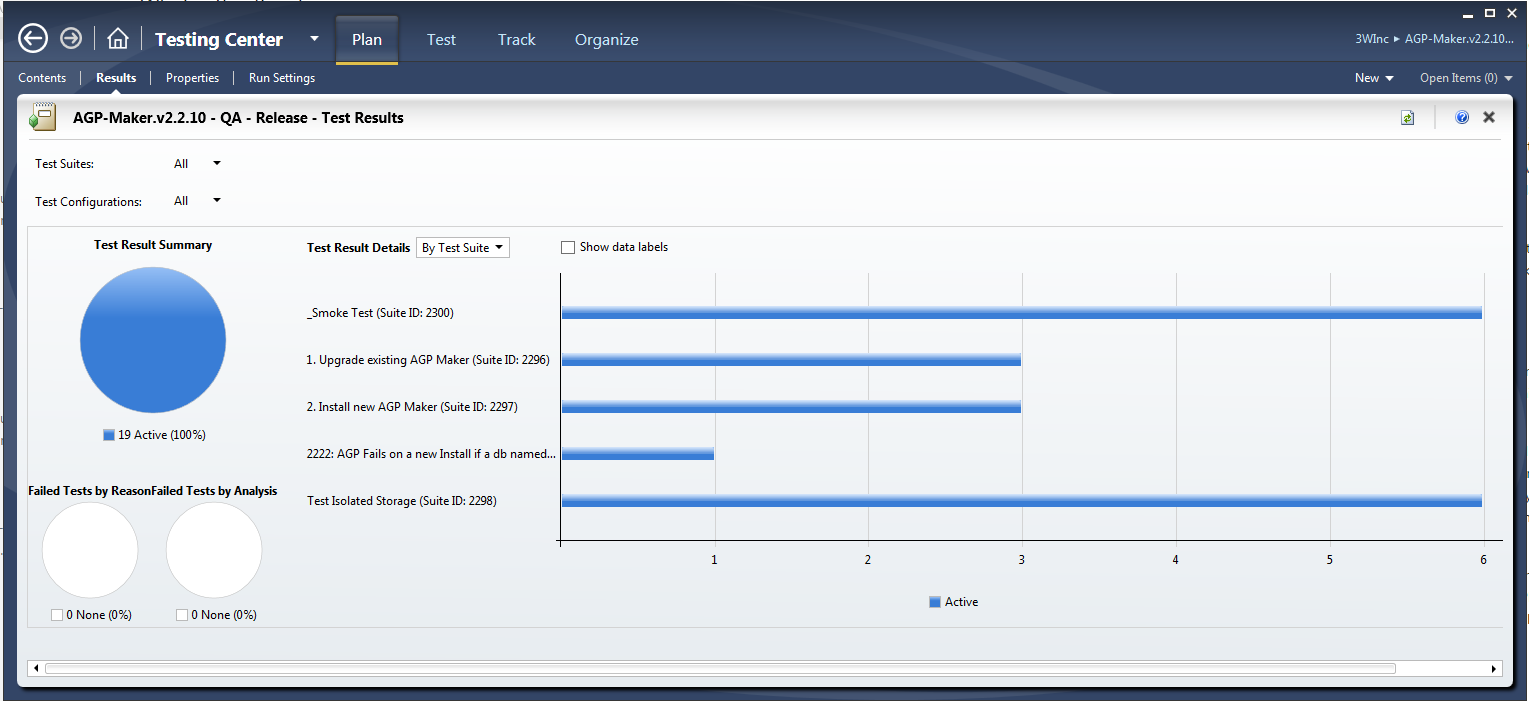
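The two state flows described above can be sketched as small transition tables. This is only an illustration, not a TFS process template definition: the `WORKFLOWS` dictionary, the workflow names `active_style` and `committed_style`, and the `can_move` helper are hypothetical, and I have assumed Proposed as the starting state of the first flow and New as the starting state of the second.

```python
# Hypothetical sketch of the two state flows; not a real process template.
WORKFLOWS = {
    "active_style": {
        "Proposed": {"Active"},
        "Active": {"Resolved"},
        # A tester can close the item or send it back (a reactivation).
        "Resolved": {"Closed", "Active"},
        "Closed": {"Active"},
    },
    "committed_style": {
        "New": {"Approved"},
        "Approved": {"Committed"},
        "Committed": {"Done"},
        "Done": set(),
    },
}


def can_move(workflow, current, target):
    """Return True if the workflow allows moving from current to target."""
    return target in WORKFLOWS[workflow].get(current, set())


print(can_move("active_style", "Active", "Resolved"))
print(can_move("active_style", "Resolved", "Ready for UAT"))
```

Notice that nothing in either table mentions an environment. The state only says how far along the work item is; where the build is deployed is a separate question.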

This is where I typically hear confusion, as the next question is usually something like this: if all the requirement and bug work items have been closed, how do we know what to test? The test plan, of course. This should be the report that tells you what state these builds are in. It is from this one report, the results of the test plan, that we should base our approvals for the build to move on to the next environment and eventually to production. Let the Test Plan Tell the Story. From the test plan we can see not only whether the current functionality is working and matches our expectations, but also the results of the regression testing that should be going on to make sure features that worked in the past are still working. We get all that information from this one single report, the test plan.
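As a rough sketch of what basing the approval on the test plan could look like, the snippet below summarizes a set of test results for one build and decides whether it is a candidate for the next environment. Everything here is an assumption for illustration: the `CaseResult` class, the outcome strings, the "every test case must pass" rule, and the sample data are mine, not something TFS or MTM prescribes.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class CaseResult:
    """One test case result in the plan (hypothetical shape)."""
    test_case: str
    outcome: str  # assumed values: "Passed", "Failed", "Not run"
    regression: bool = False  # True if this covers previously shipped features


def summarize(results):
    """Count results per outcome."""
    return Counter(result.outcome for result in results)


def ready_to_promote(results):
    """Assumed rule: promote only when there are results and all passed."""
    return bool(results) and all(r.outcome == "Passed" for r in results)


# Made-up results for one build.
build_results = [
    CaseResult("Customer can place an order", "Passed"),
    CaseResult("Customer can cancel an order", "Failed"),
    CaseResult("Existing login still works", "Passed", regression=True),
]

print(dict(summarize(build_results)))
print(ready_to_promote(build_results))
```

Your team may well settle on a different rule, for example tolerating known failures, but the point stands: the decision reads the test plan results, not the work item states.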

The Test Impact Report

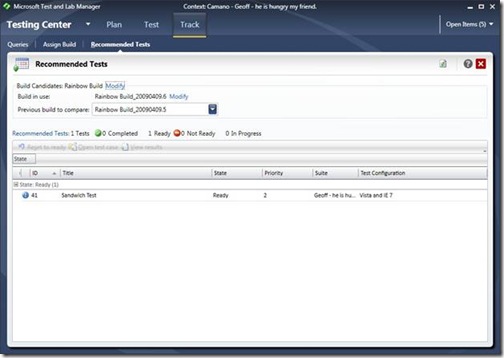

As we test the various builds throughout the current iteration, with new requirements being completed and bugs fixed, the testers run those test cases to verify that the work truly is completed. If you have been using Microsoft Test Manager (MTM), this is a .NET application, and you have turned on test impact instrumentation through the test settings, you have the added benefit of the Test Impact Report. As you update the build that you are testing, MTM compares it to the previous build and to what has been tested before. When it detects that code has changed near the code that we previously tested and probably passed, it includes those test cases in the Test Impact Report as tests you might want to rerun, just to make sure the changes do not affect your passed tests.
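The idea behind the report can be illustrated with a simplified sketch. This is not how MTM implements test impact analysis; it assumes we somehow know which methods each passed test exercised and which methods changed between builds. The `impacted_tests` function and all the test and method names are hypothetical.

```python
# Simplified illustration of the idea, not MTM's actual implementation.
def impacted_tests(coverage, changed_methods, passed_tests):
    """Return passed tests that exercised at least one changed method."""
    return sorted(
        test
        for test in passed_tests
        if coverage.get(test, set()) & changed_methods
    )


# Made-up data: methods each test touched when it last ran.
coverage = {
    "Place order": {"Order.Create", "Cart.Total"},
    "Cancel order": {"Order.Cancel"},
    "Login": {"Account.SignIn"},
}
passed_tests = {"Place order", "Cancel order", "Login"}

# Made-up list of methods that differ between the previous and the new build.
changed_methods = {"Cart.Total", "Account.SignIn"}

rerun = impacted_tests(coverage, changed_methods, passed_tests)
print(rerun)
```

Only the tests whose covered code overlaps the changes are suggested for a rerun; the rest keep their passed result from the earlier build.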

The end result is that we have a test plan that tells the story of the quality of the code written in this iteration and specifically lists the build that we might want to consider pushing into production.